Yes, its been a looong time.

So, let me get started with the first item on the menu. I promised to write a "SIMD" version of the diffraction code. It turns out that CUDA gpus do not do 4 wide vector operations (according to a reasonably reliable source). I myself have found no reference to vectorized ALU in the GPU, and all references to SIMD on GPU point to warps, which are in some sense, single instruction multiple data units. So I am now to assume that we can only do scalar operations on each shader unit.

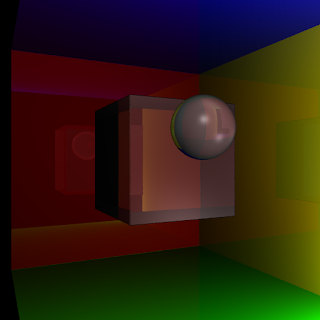

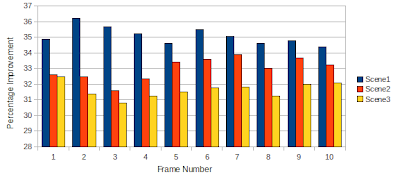

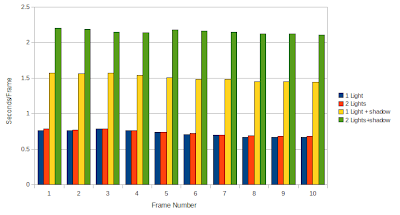

It turns out that we can nevertheless try to squeeze out the requisite performance from CUDA. The GLSL implementation of diffraction through a 256x256 diffraction grating runs in around 5 seconds. It turns out that if we do a single float4 texture read in each iteration of the kernel, and write a float4 at a time to the output buffer, we can save quite some time in the kernel.

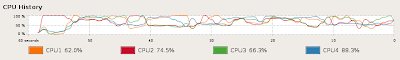

The earlier implementations took at best 16.5 seconds to complete the process. The new implementation now just takes 5.6 seconds of which the actual system time is just 4.5 seconds.

Pitfalls:

It turns out that not all is well on the CUDA compiler front. I ran into multiple bugs while implementing this last optimization.

Firstly, I was unable to pass a particular float argument from the cpu code through the kernel, and into 2 device functions. It simply kept mucking up each and every time. I finally gave up and hard-coded that value into the kernel. Later I found that the bug was gone in CUDA 2.3. Not really sure what the bug was, but it may have been related to this next issue.

The CUDA compiler seems to really mess up if you do not EXPLICITLY specify each constant floating point value as single precision (0.65f instead of 0.65). It once in a while throws a warning about 'Double' not being supported. You won't even know where this is because the line number is from the ptx file. Heed this warning and remove all doubles from your code (if you are using g80-g92 that is). These innocuous warnings actually come with drastic side effects.

For example, I had defined a pure function which had a few floating point constants inside it. A single invocation of this function, per iteration in the kernel, would work fine. But 2 or 3 invocations would result in an abrupt return from the kernel, so no processing would be done at all.

Here is the kernel code (brought to you by the magic of vim and :TOhtml)

__global__ void gpu_emit_photons(float4* buffer, int inputRow, int inputCol, float pitch)

{

const int tid_x = threadIdx.x+blockDim.x*blockIdx.x;//destination col

const int tid_y = threadIdx.y+blockDim.y*blockIdx.y;//destination row

float srcPosX, srcPosY, destPosX, destPosY, deltaPosX, deltaPosY;

const float scrSize_2_inv = 2.0f/screenSize;

const float lambdaRed = 0.72f, lambdaGreen = 0.53f, lambdaBlue = 0.47f;

float theta, phi, uRed, vRed, uGreen, vGreen, uBlue, vBlue;

int i,j;

float4 intensity;

float tempIntensityR = 0.0f, tempIntensityG = 0.0f, tempIntensityB = 0.0f;

for (i=inputRow; i<inputRow+BUCKET_SIZE; i++){

for (j=inputCol; j<inputCol+BUCKET_SIZE; j++){

float rand1= -tex2D(texRef1, i, j);

float rand2= -tex2D(texRef1, i, j+1);

intensity = tex2D(texRef2, (float)j+rand1, (float)i+rand2);

srcPosX=posMax*(1.0f- ((float)i+rand1)*scrSize_2_inv);

srcPosY=posMax*(1.0f- ((float)j+rand2)*scrSize_2_inv);

destPosX=posMax*(1.0f-(float)tid_x*scrSize_2_inv);

destPosY=posMax*(1.0f-(float)tid_y*scrSize_2_inv);

//deltaPos is the difference between x coordinates of src and dest

deltaPosX=srcPosX-destPosX;

deltaPosY=srcPosY-destPosY;

theta = atan2( deltaPosX,screenDistance);

phi = atan2( deltaPosY,screenDistance);

uRed = theta/lambdaRed; vRed = phi/lambdaRed;

uGreen = theta/lambdaGreen; vGreen = phi/lambdaGreen;

uBlue = theta/lambdaBlue; vBlue = phi/lambdaBlue;

//Assume light is incident perpendicularly onto the optical element;

//In the general case, 'u' becomes u-u_input and 'v' becomes v-v_input, where u_input and v_input are angles of incidence

tempIntensityR += wdf_analytical(srcPosX,srcPosY,uRed,vRed,lambdaRed,pitch) * intensity.x;

tempIntensityG += wdf_analytical(srcPosX,srcPosY,uGreen,vGreen,lambdaGreen,pitch) * intensity.y;

tempIntensityB += wdf_analytical(srcPosX,srcPosY,uBlue,vBlue,lambdaBlue,pitch) * intensity.z;

}

}

buffer[tid_y + tid_x*SIZE] = buffer[tid_y + tid_x*SIZE] + make_float4(tempIntensityR,tempIntensityG,tempIntensityB,0);

}

In the above code, the function call of interest is wdf_analytical(...). This function evaluates the wigner distribution value at the specified x,y,theta,phi coordinates. Its like a 4d lookup table. We need to invoke this function once for each wavelength of light that we want to handle (here, red, green, blue). It turns out that the simple double to float warning had some unforseen ramifications in terms of crashing my code when the function was called more than once. For a single invocation, it worked like a charm.

Well, now of course, everything is in order, and the code works quite fast. The time for optimizations has almost come to an end. One further optimization I should do before closing the topic however, is to use the Leapfrog Lagged Fibonacci Generator (LLFG random number generator) which a bunch of clever guys at my lab have coded up in CUDA. They claim its the world's fastest random number generator (faster than Mersenne Twister on CUDA). It may give good performance benefits versus using a random texture. Only (a lot of) time will tell...